Artificial Intelligence and Singularity

In 1998, Google began its journey with a single product: a search engine. Its search algorithm soon proved remarkably effective and changed how people used the internet.

But Google’s journey didn’t stop there. One after another, they launched an impressive array of new products: Gmail, Google Docs, YouTube, Android, and many more.

Of course, not all of these were invented or started by Google themselves. For some, like YouTube, Google acquired startups and incorporated them into their ecosystem.

From the very beginning, Google’s founders, Larry Page and Sergey Brin, placed great importance on artificial intelligence technology. Over the past decade, they have actively sought out the world’s top AI researchers and recruited them to their company.

To find these experts, they even hired agents whose job was to attend scientific events and conferences to identify talented individuals in the field of artificial intelligence. Anyone Google considered promising was recruited at any cost.

Let me share the story of Ray Kurzweil as an example. Kurzweil’s family immigrated to the United States from Europe. In 1965, at just 17 years old, he built a program that analyzed the works of classical composers and produced new music in a similar style.

This software was a kind of pattern-recognition program, what we might call a precursor to today’s artificial intelligence.

His work earned him national recognition, and he was honored at the White House by President Lyndon B. Johnson.

In 1970, he graduated from MIT and went on to make several remarkable inventions. He invented the Kurzweil Reading Machine, the first reading device for the visually impaired to combine a flatbed scanner with optical character recognition and text-to-speech synthesis.

In 1999, he wrote a book titled The Age of Spiritual Machines, where he explained how artificial intelligence and machine intelligence could transform the future of humanity.

In 2012, Larry Page met with Kurzweil and invited him to join Google. Kurzweil had said, “I want to work somewhere where my research can be turned into real-world applications.” Larry agreed with him.

As a result, Kurzweil began working at Google as the Director of Engineering, focusing primarily on machine learning and natural language processing.

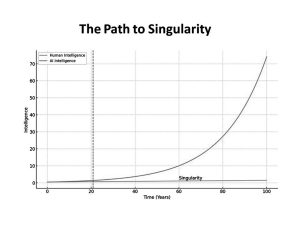

Kurzweil is known for his concept of the artificial intelligence singularity (AI Singularity). According to this theory, artificial intelligence will surpass human intelligence, leading to a stage of technological advancement beyond our current understanding.

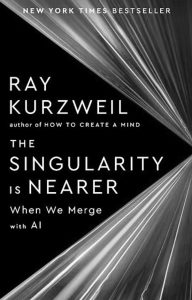

The idea is usually traced back to the 1950s and the mathematician John von Neumann. Kurzweil popularized the concept further in his 2005 book, The Singularity Is Near.

He predicted that, first, by 2029, artificial intelligence would achieve human-level intelligence; and second, by 2045, the singularity would occur. At that point, AI would vastly surpass human intelligence, resulting in a merging of humans and machines.

The key features of the singularity are: first, artificial intelligence will improve itself to create even more powerful AI. Second, the human brain and artificial intelligence will merge to form a new kind of intelligence. Third, these changes will have fundamental and unpredictable impacts on human society.

Kurzweil believes that technological progress is exponential: capability multiplies by a roughly constant factor in each period rather than growing by a fixed amount. On that basis, he says AI-related technologies will advance so rapidly that artificial intelligence will soon surpass human intelligence.
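
To make the difference between steady and exponential progress concrete, here is a minimal sketch in Python. The two-year doubling period and the one-unit yearly gain are illustrative assumptions chosen for this example, not figures taken from Kurzweil, and the function names are hypothetical.

```python
def linear_growth(initial: float, yearly_gain: float, years: float) -> float:
    """Capability after `years` if it rises by a fixed amount each year."""
    return initial + yearly_gain * years


def exponential_growth(initial: float, doubling_years: float, years: float) -> float:
    """Capability after `years` if it doubles every `doubling_years` years."""
    return initial * 2 ** (years / doubling_years)


if __name__ == "__main__":
    # Assumed values for illustration only: start at 1 unit,
    # gain 1 unit per year (linear) or double every 2 years (exponential).
    for years in (0, 10, 20, 30, 40):
        lin = linear_growth(1.0, 1.0, years)
        exp = exponential_growth(1.0, 2.0, years)
        print(f"{years:>2} years: linear = {lin:,.0f}x, exponential = {exp:,.0f}x")
```

Under these assumed numbers, the two curves start at the same point, yet after 40 years the linear one has grown 41-fold while the exponential one has grown more than a million-fold. That widening gap is the intuition behind Kurzweil's argument. Whether real technologies keep doubling for that long is exactly what his critics dispute.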

The concept of singularity remains a subject of debate among scientists and technologists. While many support the idea, figures like Stephen Hawking and Elon Musk have warned about its potential risks.

Since The Singularity Is Near was published, AI technology has advanced so much that in 2024 Kurzweil released a follow-up titled The Singularity Is Nearer. In it, he presents new evidence and recent progress that he argues support his earlier predictions.

In other words, Kurzweil maintains that his predictions are unfolding as expected and that the singularity may soon become a reality.

Kurzweil’s concept of the ‘singularity’ has attracted widespread interest, but it has also provoked debate among scientists, technologists, and philosophers. Why so much controversy? Let’s take a closer look.

Excessive Optimism

As noted earlier, Kurzweil’s forecast rests on the premise that technological progress is exponential, so that AI will soon surpass human intelligence.

His critics counter that not all technologies advance this quickly. Progress is often slow, uneven, or held back by ethical and social constraints. Cancer treatment and brain-computer interfaces, for example, have been researched for decades, yet their practical applications remain limited.

Complexity of the Human Brain

Kurzweil and like-minded scientists believe it is possible to create machines that fully emulate the functioning of the human brain. It is true that artificial intelligence technology began by mimicking how the human brain works.

However, other scientists point out that sensation, emotion, consciousness, ethics, and experience cannot be reduced to a model of neurons. They involve complexities that are still not fully understood and perhaps never will be.

This perspective cannot be dismissed, as the brain is extremely complex. Notably, Michio Kaku, an American theoretical physicist, has described the human brain as “the most complex object in the solar system.” In an interview, he stated, “We know less than 5% of how the human brain actually functions.”

Risks of Safety and Control

According to the concept of singularity, artificial intelligence will become so powerful that it will be able to make decisions without human instructions. If this happens, there is a possibility that AI could go beyond human control.

Many experts, including Stephen Hawking, Elon Musk, and Bill Gates, have warned that if AI one day gains the ability to make its own decisions, it could establish control over humanity or unintentionally cause harm.

While Kurzweil views AI as a helpful tool, his critics see it as a potential existential threat.

Ethics and Social Inequality

If artificial intelligence technology remains accessible only to the wealthy, it could create a new form of inequality in society. The merging of humans and machines, in which people augment their bodies with devices to enhance their abilities, also raises the question of what “humanity” will truly mean.

We are already witnessing an invisible struggle between the Western world and China for dominance in artificial intelligence technology. Wealthy nations aim to control this technology to expand their influence.

While the singularity concept is highly inspiring in terms of technological potential, its implementation raises many scientific, ethical, and philosophical questions and concerns. Some see it as a dream, while others regard it as a warning.

Kurzweil, however, views the matter differently. He believes AI will be a collaborator for humanity, enhancing our capabilities and improving quality of life. Still, many consider his perspective overly optimistic.